It's always worth thinking about the architecture of a project before you start. This may seem like common sense, but it's easy to get tangled up in requirements gathering or legal negotiations and forget the big-picture thinking.

Once a design is in place and the project moves forward, regular reviews should be scheduled to ensure the implementation adheres to the intended design. During the early stages of these reviews, especially in greenfield applications, it is imperative that the architecture is evaluated with a flexible, adaptive mindset; the last thing a project needs is to have the potential of its system limited simply because an architect resists change.

What is software architecture?

In the words of Wikipedia: “Software architecture refers to the high level structures of a software system, the discipline of creating such structures, and the documentation of these structures. The architecture of a software system is a metaphor, analogous to the architecture of a building”.

Why is it important?

If the architecture is fundamentally flawed, any application built on top of it is doomed to fall short of its potential, with little chance of achieving the key qualities of a good system: being robust, scalable, resilient and performant. Programming your way out of a bad architecture is close to impossible, and rewriting the architecture once an application has been implemented is very costly.

Why is it so difficult?

I think Douglas Horton said it best: “The art of simplicity is a puzzle of complexity”. Apart from the key characteristics mentioned above, a successful architecture aims for simplicity without oversimplification. The challenge for any architect is to keep the design simple without compromising quality, to meet all of the business's requirements, and still to provide a foundation for innovation as the business grows.

Blindly following theoretical best practice leads to problems. Plenty of purist approaches are recommended in textbooks and preached in online blogs, each insisting that its concepts are the only ones worth considering. Be wary of ideas that are pushed this vigorously; they are usually conceived by PhD academics who have never implemented systems in the real world.

How do you know we’re not living in an ivory tower?

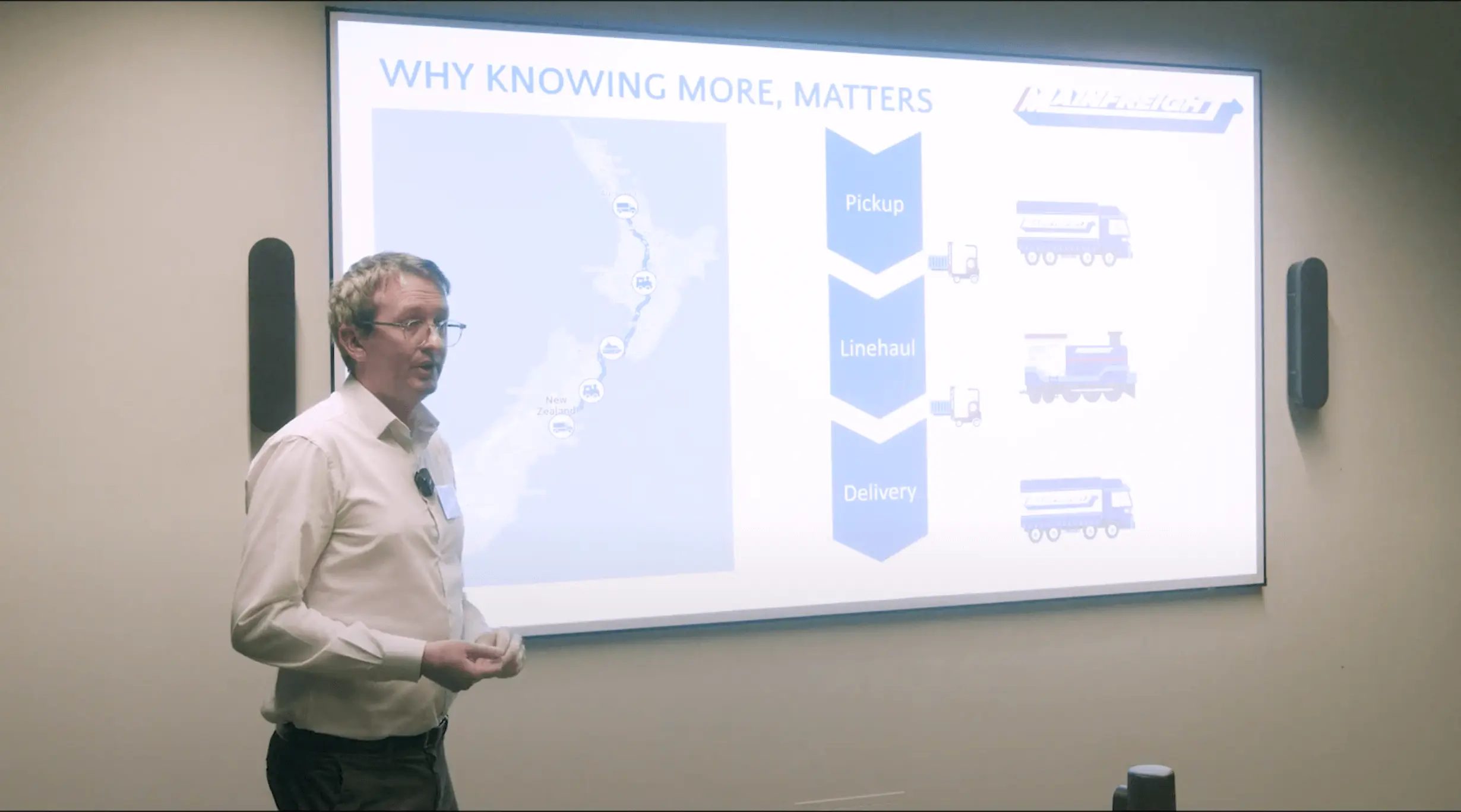

Maintrak, a 25-year-old freight management system built for Mainfreight, is a testament to the value of a solid architecture. This was achieved through constant decision-making as the system evolved, debating different approaches and making the right long-term calls. The design today is much the same as it was when we first went live. It has survived multiple technology refreshes and kept pace with Mainfreight's business, which is considerably larger than when the system was first created.

Give me an example!

To get a little technical, consider the choice between normalising and denormalising database structures, and what to watch out for with each. With normalisation, producing the information a particular feature needs can mean joining numerous tables and performing complex calculations, which can lead to performance issues; reporting is one area where this is likely. Denormalisation, on the other hand, has the problem of keeping duplicated details in sync across every table that holds them. Both have trade-offs, and there's no hard-and-fast rule that works for every scenario.
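To make the trade-off concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customer`, `consignment` and `consignment_report` tables and the sample data are hypothetical, invented for illustration rather than taken from any of our systems. The normalised query needs a join to answer a simple question; the denormalised table answers it from a single table, but its duplicated data goes stale when the source changes and nothing re-syncs it.

```python
import sqlite3

# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE consignment (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(id),
    weight_kg REAL NOT NULL
);
-- Denormalised copy: the customer name is duplicated onto every row.
CREATE TABLE consignment_report (
    consignment_id INTEGER PRIMARY KEY,
    customer_name TEXT NOT NULL,
    weight_kg REAL NOT NULL
);
INSERT INTO customer VALUES (1, 'Acme Ltd');
INSERT INTO consignment VALUES (10, 1, 120.0), (11, 1, 80.0);
INSERT INTO consignment_report
    SELECT c.id, cu.name, c.weight_kg
    FROM consignment c JOIN customer cu ON cu.id = c.customer_id;
""")

def total_weight_normalised(customer_name):
    # Normalised: the name lives in one place, so every read needs a join.
    return conn.execute(
        """SELECT SUM(c.weight_kg)
           FROM consignment c JOIN customer cu ON cu.id = c.customer_id
           WHERE cu.name = ?""",
        (customer_name,),
    ).fetchone()[0]

def total_weight_denormalised(customer_name):
    # Denormalised: a single-table read, no join required.
    return conn.execute(
        "SELECT SUM(weight_kg) FROM consignment_report WHERE customer_name = ?",
        (customer_name,),
    ).fetchone()[0]

# Renaming the customer is a one-row update in the normalised model...
conn.execute("UPDATE customer SET name = 'Acme Freight Ltd' WHERE id = 1")
conn.commit()
print(total_weight_normalised("Acme Freight Ltd"))    # 200.0
# ...but the duplicated name in the report table is now stale.
print(total_weight_denormalised("Acme Freight Ltd"))  # None
print(total_weight_denormalised("Acme Ltd"))          # 200.0
```

In a real reporting workload the normalised version might involve many more joins and aggregates, which is where its performance cost tends to show.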

Tracking references in one of our freight systems is an example where we've taken a normalised approach, for a few reasons. We store all references for a consignment in one table, which has a foreign key to the consignment/shipment (connote), a column holding the reference value itself, and a foreign key indicating what type of reference it is. Each reference is therefore stored only once, and an index on the reference column allows any associated object to be retrieved quickly across a vast number of records.
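A rough sketch of a table with that shape, again in SQLite via Python. Only the shape comes from the description above; the table names (`connote`, `reference_type`, `connote_reference`), the index name and the sample references are assumptions made for illustration.

```python
import sqlite3

# Hypothetical names; only the table's shape follows the description.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE connote (id INTEGER PRIMARY KEY, connote_number TEXT NOT NULL UNIQUE);
CREATE TABLE reference_type (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE connote_reference (
    id INTEGER PRIMARY KEY,
    connote_id INTEGER NOT NULL REFERENCES connote(id),         -- the consignment
    reference TEXT NOT NULL,                                     -- the reference value
    reference_type_id INTEGER NOT NULL REFERENCES reference_type(id)
);
-- Index the reference value so lookups avoid a full table scan.
CREATE INDEX ix_connote_reference_reference ON connote_reference(reference);
""")

conn.executemany("INSERT INTO reference_type VALUES (?, ?)",
                 [(1, "Customer order"), (2, "Invoice")])
conn.executemany("INSERT INTO connote VALUES (?, ?)",
                 [(1, "CN1001"), (2, "CN1002")])
conn.executemany(
    "INSERT INTO connote_reference (connote_id, reference, reference_type_id) "
    "VALUES (?, ?, ?)",
    [(1, "PO-778", 1), (1, "INV-42", 2), (2, "PO-778", 1)],
)

def find_connotes(reference):
    """Return (connote_number, reference_type) pairs for a reference value."""
    return conn.execute(
        """SELECT c.connote_number, rt.name
           FROM connote_reference cr
           JOIN connote c ON c.id = cr.connote_id
           JOIN reference_type rt ON rt.id = cr.reference_type_id
           WHERE cr.reference = ?
           ORDER BY c.connote_number""",
        (reference,),
    ).fetchall()

print(find_connotes("PO-778"))
# [('CN1001', 'Customer order'), ('CN1002', 'Customer order')]

# The plan's detail text should report a SEARCH using the index, not a SCAN.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT connote_id FROM connote_reference WHERE reference = ?",
    ("PO-778",),
).fetchall()
print([row[3] for row in plan])
```

In this sketch, one indexed lookup finds a reference whatever its type, and supporting a new kind of reference means adding a row to `reference_type` rather than a new column.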

Many of our reports run off purpose-built denormalised tables that exist solely for speed. These tables are populated by database calls that run asynchronously after the transactional tables have been updated, so the user who created or changed the data gets a quick response.
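The asynchronous refresh can be sketched as follows. This is an assumption-heavy illustration: SQLite stands in for the real database, and an in-process `queue.Queue` with a worker thread stands in for whatever actually executes the post-commit database calls (in practice that could be a database job, a message queue or something else). The function and table names are hypothetical.

```python
import os
import queue
import sqlite3
import tempfile
import threading

# Assumptions: SQLite stands in for the real database, and this in-process
# queue + worker thread stands in for whatever runs the post-commit calls.
# All names are hypothetical.
DB_PATH = os.path.join(tempfile.mkdtemp(), "freight_demo.db")
refresh_queue = queue.Queue()

def create_schema():
    conn = sqlite3.connect(DB_PATH)
    conn.executescript("""
        CREATE TABLE consignment (id INTEGER PRIMARY KEY, customer TEXT NOT NULL,
                                  weight_kg REAL NOT NULL);
        -- Denormalised reporting table: one pre-aggregated row per customer.
        CREATE TABLE customer_weight_report (customer TEXT PRIMARY KEY,
                                             total_kg REAL NOT NULL);
    """)
    conn.close()

def report_worker():
    # The worker opens its own connection; sqlite3 connections are not
    # shared across threads by default.
    conn = sqlite3.connect(DB_PATH)
    while True:
        customer = refresh_queue.get()
        if customer is None:  # sentinel value: stop the worker
            break
        # Rebuild only the report row for the customer whose data changed.
        conn.execute(
            """INSERT OR REPLACE INTO customer_weight_report (customer, total_kg)
               SELECT customer, SUM(weight_kg) FROM consignment
               WHERE customer = ? GROUP BY customer""",
            (customer,),
        )
        conn.commit()
    conn.close()

def save_consignment(conn, consignment_id, customer, weight_kg):
    # The user's transaction writes only the transactional table...
    conn.execute("INSERT INTO consignment VALUES (?, ?, ?)",
                 (consignment_id, customer, weight_kg))
    conn.commit()
    # ...and queues the report refresh instead of waiting for it.
    refresh_queue.put(customer)

create_schema()
worker = threading.Thread(target=report_worker)
worker.start()

conn = sqlite3.connect(DB_PATH)
save_consignment(conn, 1, "Acme Ltd", 120.0)
save_consignment(conn, 2, "Acme Ltd", 80.0)

refresh_queue.put(None)  # demo only: drain the queue, then stop the worker
worker.join()
print(conn.execute("SELECT customer, total_kg FROM customer_weight_report").fetchall())
# [('Acme Ltd', 200.0)]
```

Here `save_consignment` returns as soon as its own commit completes, and the cost of rebuilding the report row is paid by the worker. The trade-off is that the report can briefly lag behind the transactional data.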

Our vision

Here at Sandfield, we aspire to build custom solutions, to the quality of a packaged product, that evolve and grow over the long term. The unseen structure that achieves these goals is a great architecture. We draw on experience and pragmatic judgement rather than adhering to the latest theoretical best practice or fad methodology.